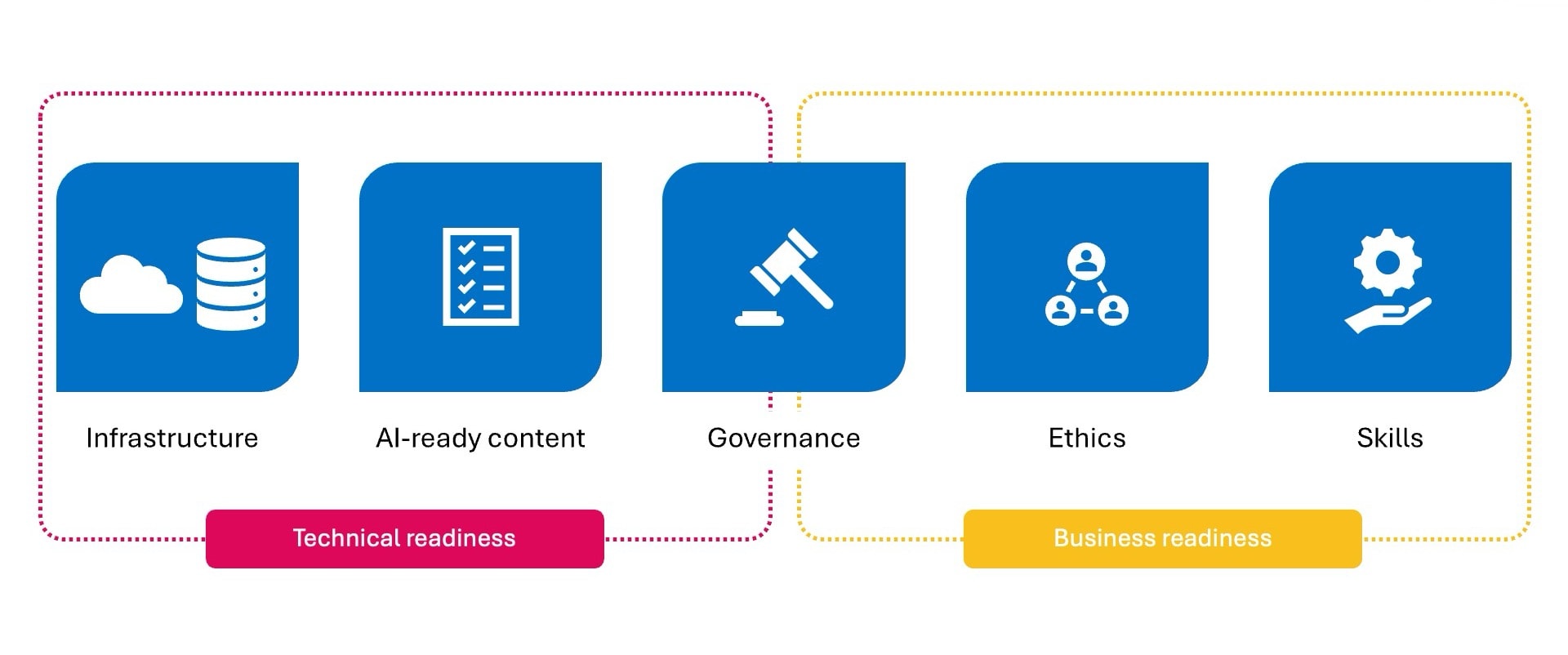

2. AI-ready content

AI-ready content is another technical hurdle to clear. Content needs to be curated so that the AI produces high-quality output.

“There are layers to developing the appropriate corpus of data,” Davis said. “Each piece of a document needs to be broken out and sent for training. This creates embeddings that are used downstream in the gen AI space. It’s a process that organizations need to understand and be ready for.”

For example, if an HR department were readying its content for an AI-powered platform, it might identify records with bad labels or poor tagging and leave them out. Once the quality content is loaded, the model’s algorithm runs, and the team can train the system by ranking its output. The AI then starts to understand the intent behind the content and can begin improving on its own.
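As a rough illustration of that curation step, here is a minimal Python sketch. Everything in it is an assumption made for the example rather than a description of any particular platform: the `Record` fields, the quality rule in `is_ai_ready` and the 50-word chunk size are all hypothetical. It drops records with missing labels or tags, then breaks the remaining documents into pieces that could be sent on for embedding.

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    """Hypothetical shape of a content record; real systems will differ."""
    doc_id: str
    text: str
    label: str = ""
    tags: list[str] = field(default_factory=list)


def is_ai_ready(record: Record) -> bool:
    """Assumed quality rule: a record needs text, a label and at least one tag."""
    return bool(record.text.strip()) and bool(record.label.strip()) and len(record.tags) > 0


def chunk_text(text: str, max_words: int = 50) -> list[str]:
    """Split a document into word-bounded pieces that could be embedded separately."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def curate(records: list[Record], max_words: int = 50) -> list[tuple[str, str]]:
    """Drop records that fail the quality rule, then chunk the rest."""
    curated = []
    for record in records:
        if not is_ai_ready(record):
            continue  # poorly labeled or untagged content is left out
        for chunk in chunk_text(record.text, max_words):
            curated.append((record.doc_id, chunk))
    return curated


if __name__ == "__main__":
    hr_records = [
        Record("hr-1", "word " * 120, label="policy", tags=["benefits"]),
        Record("hr-2", "Unlabeled scan of an old form", label="", tags=[]),
    ]
    for doc_id, chunk in curate(hr_records):
        print(doc_id, len(chunk.split()), "words")
```

In practice the quality rule would come from the organization's own labeling and tagging standards, and the chunks would feed whichever embedding service the platform uses.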

3. Governance

Governance spans both technical readiness and business readiness. Organizations have a great responsibility when it comes to governing AI. From monitoring data access and detecting malicious incursions to ensuring responsible AI practices throughout the organization, having strict standards enables organizations to implement AI safely and securely.

When incorporating AI into products and daily operations, organizations should develop clear guidelines for product teams and employees to mitigate AI-related risks in different aspects of the business.

An AI council can also help oversee the incorporation and implementation of AI, and ensure that guidelines keep pace with technological advancements and changes in the law.

Adhering to an organization's security and compliance standards is essential. Given AI's heavy reliance on data, having robust policies and the right technical tools in place provides a strong foundation for a secure AI implementation.

4. Ethics

“Ethics is something we take very seriously,” Davis said. “You need to have an ethical foundation in place — it’s critical to deliver on responsible AI.”

Davis said ethics is a common point of concern among customers and in RFPs. Honesty, bias and explainability are all facets of this component of business readiness.

“If an AI engine is going to make a decision or a recommendation, customers need to be able to understand how it came to that conclusion and what benchmarks and evaluations are showing these conclusions as accurate,” Davis said. “Being ready from an ethics standpoint means having guardrails in place.”

Hyland’s AI standards include transparency, data ownership, honesty, verifiable results, privacy and security, and governance.

AI-ready businesses can support quality AI outputs with ethical data, as well as monitor for things like bias. AI models also need to be able to defend against situations in which users might try to use disingenuous prompts to receive information they shouldn’t have access to.

The implications are very real for many industries, notably financial services, insurance and higher ed. From historical redlining practices in lending to fraudulent insurance claims and student evaluations, the stakes are high, and the data that feeds an AI model must be checked for bias and kept free of tainted records.

5. Skills

With AI capabilities popping up across new and familiar technologies in every industry, organizations can’t fully realize their AI ambitions without the right people to take them to the finish line. The competition for AI talent is fierce and has created a talent gap that ranges from engineers and data scientists to business users who need practical AI know-how. Organizations are eager to hire highly trained people, but AI experts point to upskilling as another route.

“We believe everyone in the organization needs to be leveled up from a knowledge perspective on AI,” Davis said. “We're going to see businesses focus more on budgets, as well as the effort and time it takes to level up their employees, so they are able to take advantage of AI and use it to get an ROI.”